Co-authored by Behzad Fatahi and Karen Whelan (Faculty of Engineering and IT)

Prefer to listen first, read later? This video podcast offers an alternative experience and highlights the key themes in our blog. Read on to see how we worked with our AI teammate to get it done.

As higher education has been working through the implications of Generative AI (GenAI) for assessment, much of the conversation has been focussed on security. Although education regulators such as TEQSA have framed GenAI as both opportunity and challenge, they are also signalling that a shift from an educative to a more regulatory approach is on the way.

Meanwhile, GenAI is transforming the way professionals work. During this transition, assessments need to be not only secure but also valid and aligned with industry practice, balancing regulatory expectations with educational integrity. Excluding GenAI risks training students in tasks that AI already performs well, rather than preparing them to engage critically and productively with it. Industry is moving quickly towards collaborative AI use – higher education must follow.

Assurance of learning (with authenticity)

Supervised, paper-based exams or other controlled assessment conditions can help assure security and demonstrate some types of disciplinary knowledge, but they are also time- and cost-intensive and do not cater to students with different accessibility needs. More importantly, we must ask whether simply verifying students’ individual work without allowing collaboration with AI really prepares them for the workplace.

To be meaningful, assessments must be valid, reliable, equitable, efficient, and authentic, and they must align with course learning outcomes (CLOs). A secured assessment that fails to measure intended learning outcomes is ultimately of little value. Moreover, assessment is a learning process, not just a final submission. Limiting it to secured end-point tasks misses chances for feedback, reflection, and deeper skill development.

As we consider different learning contexts and how they prepare students for future professions, some require a stronger emphasis on validating disciplinary knowledge, while others will place greater weight on the ability to use GenAI effectively. Professional and accrediting bodies such as Engineers Australia and their equivalents in other fields can guide universities in striking this balance to ensure assessment practices remain aligned with evolving industry needs.

Higher education has navigated similar transitions before. Closed-book exams once dominated until authentic practice demanded access to resources; open-book exams are now common. Likewise, calculators and programmable tools were once controversial but are now accepted, even in secured exams. GenAI can be approached in the same way: as a tool that professionals must learn to use responsibly and effectively.

GenAI as a reflection of collaborative professional practice

Assessment isn’t just an individual endeavour. Authentic group assessment often involves both individual accountability and group achievement and, in our field, reflects professional practice, where engineers spend a significant portion of their time collaborating. Students need to show that they can work independently and, increasingly, that they can collaborate with AI: prompting it accurately, validating outputs, and integrating results into decision-making.

In this context, GenAI becomes a virtual teammate: powerful but limited, requiring oversight and judgment. Students must learn to manage their responsibilities, demonstrate their disciplinary knowledge and critically engage with AI contributions. Because GenAI is always available as a virtual teammate, every task has, in principle, a group dimension: even when human collaborators are absent, the GenAI teammate remains.

A balanced approach to assessment

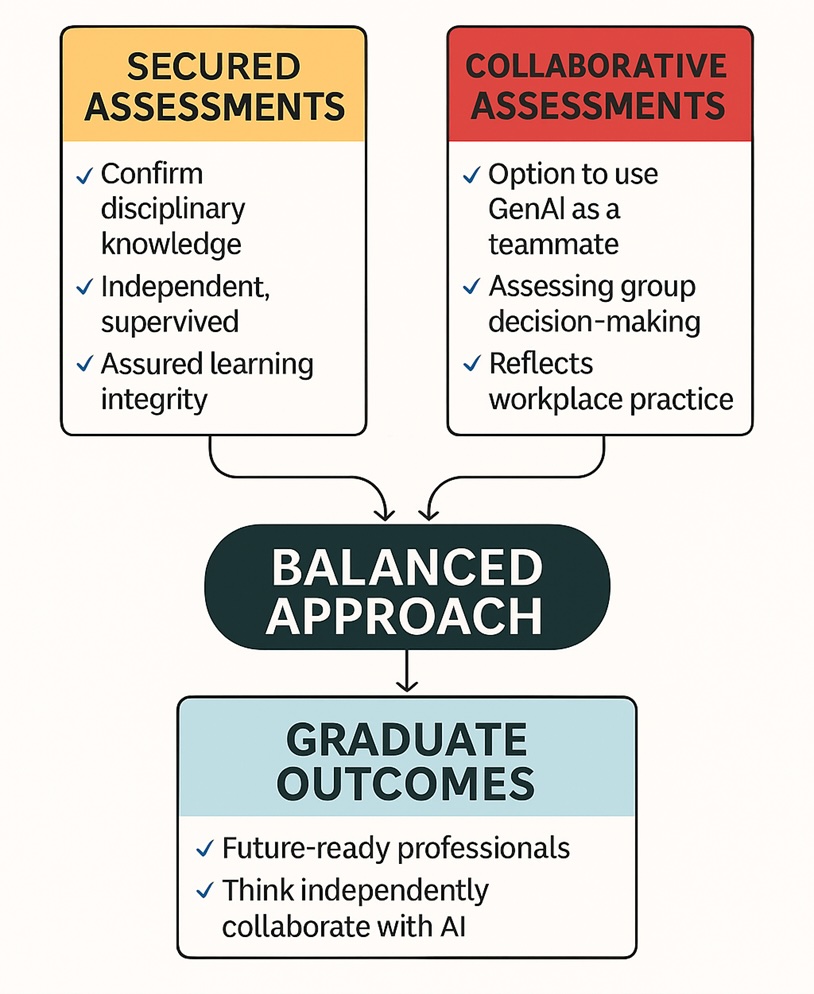

We propose a balanced approach that combines secured and collaborative assessments, aligning with the UTS ‘mosaic’ approach to assessment, which emphasises integrating diverse strategies into a cohesive and authentic whole.

1. Secured assessments for verifying disciplinary knowledge of individuals

These confirm disciplinary understanding and assure learning integrity. They may take place in individual or group contexts and provide assurance that students possess the required disciplinary knowledge, enabling validation of results, including those generated with GenAI. Examples include:

- Timed problem-solving exams applying concepts to new scenarios

- Short in-class written responses explaining or justifying ideas

- Laboratory sessions where students conduct experiments, then interpret and report results

- Oral questioning or viva to probe understanding

2. Collaborative assessments with the option of a GenAI teammate

These tasks mirror professional practice, where much work is conducted in teams and success depends on both group processes and collective outcomes. Students may choose whether and how to use GenAI as a virtual teammate, but the focus is on assessing collaboration, team efficiency and group decision-making rather than just individual contributions. Transparency matters, which means documenting the role of GenAI, validating outputs and reflecting on how group processes were managed. Examples include:

- Project proposals – GenAI may help generate options; the team must negotiate, refine, and justify recommendations

- Reports or analysis tasks – GenAI might support modelling or synthesis; the group collectively critiques results and ensures reliability

- Design or problem-solving activities – GenAI can offer alternatives; students must evaluate feasibility, ethics and impact as a team

- Group projects or presentations – where the assessment includes not only the final product but also how the team functioned, with GenAI considered one of the potential ‘teammates’

University courses also include Work Integrated Learning (WIL), which enables students to demonstrate professional competence, adaptability, and ethical judgment in real workplaces, bridging academic study and industry practice.

But is it GenAI-compatible?

Since much of the curriculum and assessment in universities was designed before the emergence of GenAI, transformation may now be necessary to ensure these are ‘GenAI-compatible’. The aim is not to train students merely in what GenAI is already good at, but to create opportunities for them to optimise, innovate and enhance productivity in ways that serve society.

Secured exams remain important, but they are only part of the picture. By combining secured assessments with collaborative, AI-enabled tasks, universities can prepare graduates for a future where humans and AI work together to achieve more than either could alone.

Postscript: our AI podcast partner

As part of our own professional exploration, we created a podcast version of the themes in this blog, in both video and audio formats. The audio podcast (listen on SharePoint) was created using the NotebookLM application, based on the blog content and enhanced through prompt engineering techniques. The video podcast was then developed using the Clipchamp video editor, combining the NotebookLM audio podcast with images generated by OpenAI GPT-5 using appropriate prompt engineering. Have a listen, and see what you think of this AI collaboration!