“Can you explain what that involves before I agree?”

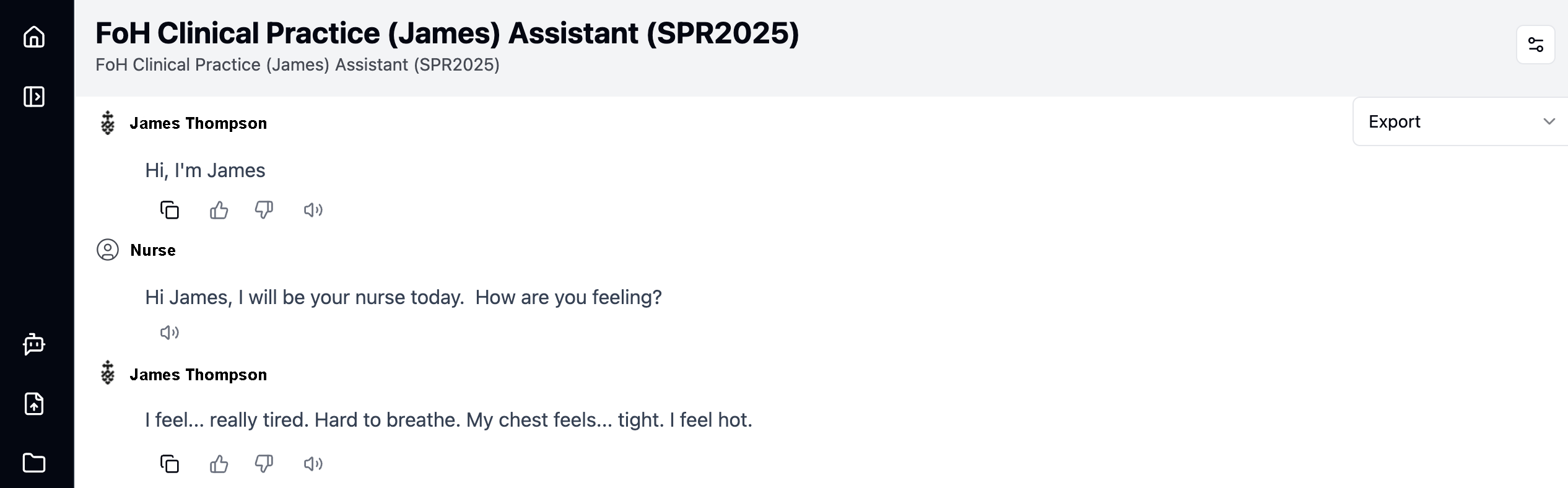

That was James, one of our AI-simulated patients, pushing back at a nursing student who had breezed in with “I’ll just take your blood pressure now.” James wasn’t having it – and that was the point.

Over the past few months, we embedded chatbots into Clinical Practice 2B, one of our largest Nursing subjects, as a way of simulating patient interactions. What started with one AI patient, James, soon grew to include Omar, plus a Feedback Assistant chatbot that analysed transcripts and offered students formative feedback.

It sounded straightforward on paper. In practice? It was a story of ethics approvals, endless testing, stubborn moderation filters, and 633 students all relying on the system to work at scale.

Fine-tuning our chatbots

Before exposing the whole cohort, we ran a smaller pilot trial. That’s where we discovered that words like blood and surgery – fairly standard in nursing – caused the chatbot to clam up. The system’s default moderation settings were so strict we had to repeatedly reset them just to let students talk about routine care. Issues like this aren’t unique to our context – others have noted the risks and opportunities of AI chatbots in nursing education.

We also learned what it meant to test live. Features that had worked for weeks could suddenly stop without explanation. It took many rounds of prompt engineering, resetting flows, and re-engineering responses before we had something stable enough to trust.

We deliberately made James and Omar more than text boxes with data. They were programmed to show personality and even emotion. If a student was respectful, explained clearly, and gained consent, the interaction flowed. But if they rushed, skipped explanations, or sounded dismissive, the chatbot became irritated or frustrated.

This was no gimmick. It modelled the real-world expectation of treating patients with empathy and respect. It also raised the stakes: students had to earn consent before performing tests. And when they did, the chatbot revealed its second role: producing test results. A typed “results” command triggered realistic clinical data – blood pressure, pulse, oxygen saturation – linking communication directly to clinical reasoning.
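For readers who like to see logic spelled out, here is a minimal sketch of that consent gate in Python. It is not how our chatbots were built: they ran on a chatbot platform and were shaped through prompts, not keyword matching. The class `SimulatedPatient`, the cue lists and the observation values are all hypothetical, chosen only to illustrate the rule that results stay locked until the student has explained the procedure and gained consent.

```python
from dataclasses import dataclass

# Hypothetical cue phrases. A real chatbot would judge tone and content with
# its language model and prompt, not with simple substring checks.
EXPLAIN_CUES = ("this involves", "to check", "because")
CONSENT_CUES = ("is that ok", "do you consent", "may i")

# Illustrative observations only, not data from the project.
OBSERVATIONS = {"blood_pressure": "128/82 mmHg", "pulse": "76 bpm", "spo2": "97%"}


@dataclass
class SimulatedPatient:
    name: str
    explained: bool = False
    consented: bool = False
    mood: str = "neutral"

    def reply(self, message: str) -> str:
        text = message.lower().strip()

        # The "results" command only works once consent has been given.
        if text == "results":
            if not self.consented:
                self.mood = "irritated"
                return "I haven't agreed to any tests yet."
            return ", ".join(f"{key}: {value}" for key, value in OBSERVATIONS.items())

        explains = any(cue in text for cue in EXPLAIN_CUES)
        asks = any(cue in text for cue in CONSENT_CUES)
        if explains:
            self.explained = True

        # Consent counts only after an explanation has been offered.
        if asks and self.explained:
            self.consented = True
            self.mood = "cooperative"
            return "Okay, go ahead."

        # Rushing ahead, or asking for consent with no explanation, irritates.
        if asks or not self.explained:
            self.mood = "irritated"
            return "Can you explain what that involves before I agree?"

        return "Thanks for explaining."


james = SimulatedPatient("James")
print(james.reply("I'll just take your blood pressure now."))
print(james.reply("This involves a cuff on your arm. Is that OK?"))
print(james.reply("results"))
```

In this sketch, a student who rushes gets the same pushback James gave, and typing "results" before consent returns a refusal instead of observations.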

Training the trainers

Rolling this out wasn’t just about the students. We ran multiple training sessions for tutors, both in person and online, so they could:

- Practise with the chatbots themselves

- Learn how to supervise assessments

- Know how to guide students through technical hiccups

One session made the risks clear. A dozen of us logged into Recast at once, and the whole system froze. Up popped a message: too many users, actions can’t be completed. Everyone just stared at the screen. It took the IT team some deep digging to fix this before the actual assessment. It was a reminder that in innovation, the unexpected is always waiting.

Students, meanwhile, were introduced to Omar and the Feedback Assistant early and given a week to practise at home. They were asked to bring their own headphones so they could hear the chatbot speak, but they had to type their own responses unless there was a clear need for voice input.

Assessment by design

The assessment came in two parts:

- Part A (in-lab): a 30-minute chatbot interaction, uploaded immediately as a PDF transcript. (Even though the system offered CSV, TXT, and PDF, we locked it to PDF only, which made the dialogue much harder to edit after the fact.)

- Part B (post-lab): a 500-word reflection, plus documentation like SAGO charts and nursing diagnoses, submitted a few days later.

Both parts were aligned with the Clinical Reasoning Cycle developed by Levett-Jones et al. (2009), so that the chatbot interaction linked directly to professional nursing standards.

Before any of this, students had to sign a Chatbot Assessment Declaration, confirming their work was their own and acknowledging staff might review their transcripts, in line with the UTS Academic Integrity Policy (and the Student Rules, Section 16).

Lessons learned

This was a project built on incremental innovation at scale. It required countless meetings, tutor briefings, and chatbot testing sessions. And it wasn’t always pretty.

Features broke, privacy settings had to be reset, and testing seemed endless. But the payoff was worth it. Students got a safe but realistic space to practise clinical reasoning, empathy, and communication. Tutors had a structured assessment process. And I, as a learning designer, walked away with three big reminders I’d share with any colleague thinking about bringing AI into assessment:

- Design for people, not just platforms. The technology will break at the worst possible moment – what matters is the support and scaffolding you build around it.

- Treat innovation as iterative. You won’t get it perfect the first time, and that’s okay. The design process is about adjusting, testing, and adjusting again.

- Remember your role. Learning designers aren’t just technicians. We’re the translators, the ones holding the tension between pedagogy, technology, and student experience.

The principles underlying this project echo sector-wide conversations, such as Jisc’s targets for the future of assessment and EDUCAUSE’s guidance on integrating generative AI into teaching and learning.

That’s a message for anyone in higher education: AI in assessment design isn’t a shortcut or a spectacle. It’s a practice of weaving technology into learning in ways that are human, thoughtful, and grounded in clear outcomes.

This work was part of an FFYE 2025 grant project, AI-enhanced virtual case study. The project was guided by Tran Dinh-Le and supported by Kathryn Fogarty and her team, whose technical expertise ensured the system could run at scale. Many others contributed, particularly through extensive testing and refinement, making the project a true collaborative effort.